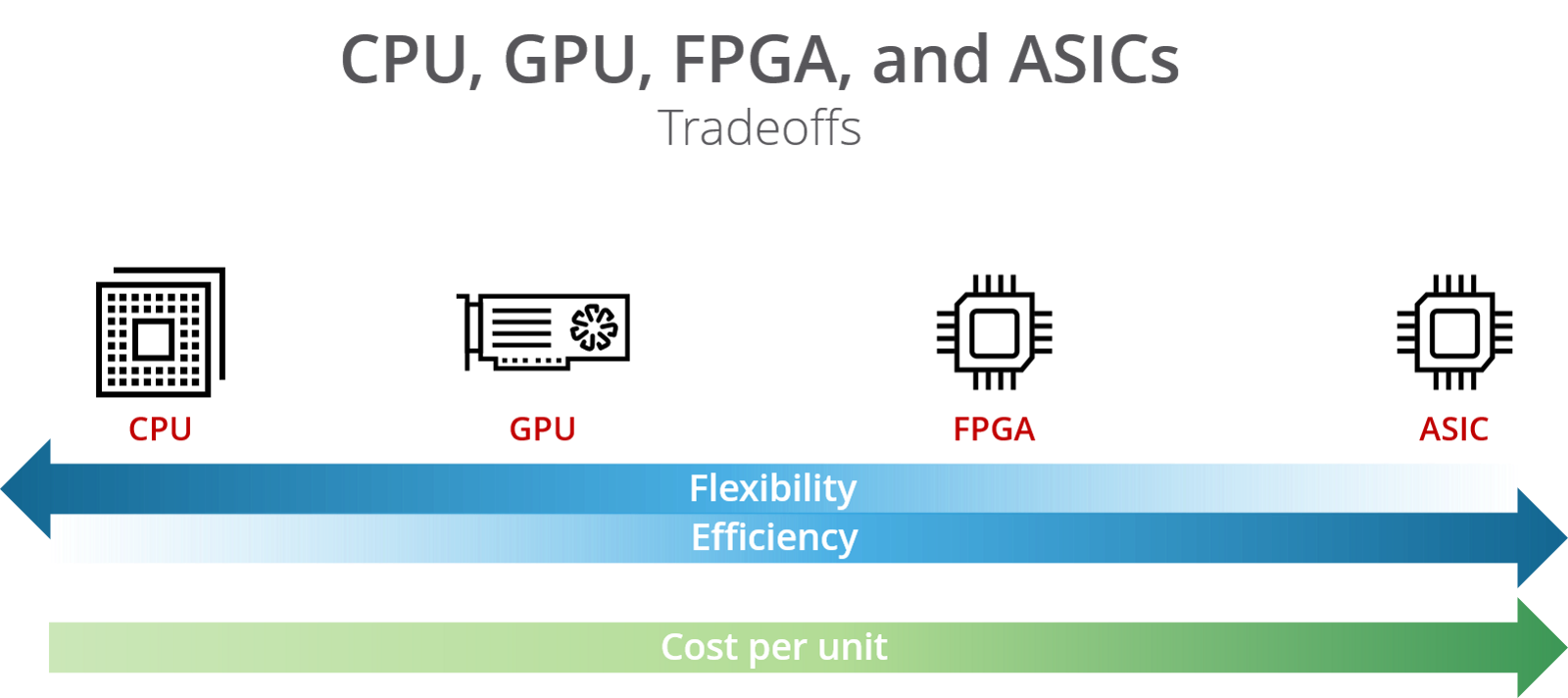

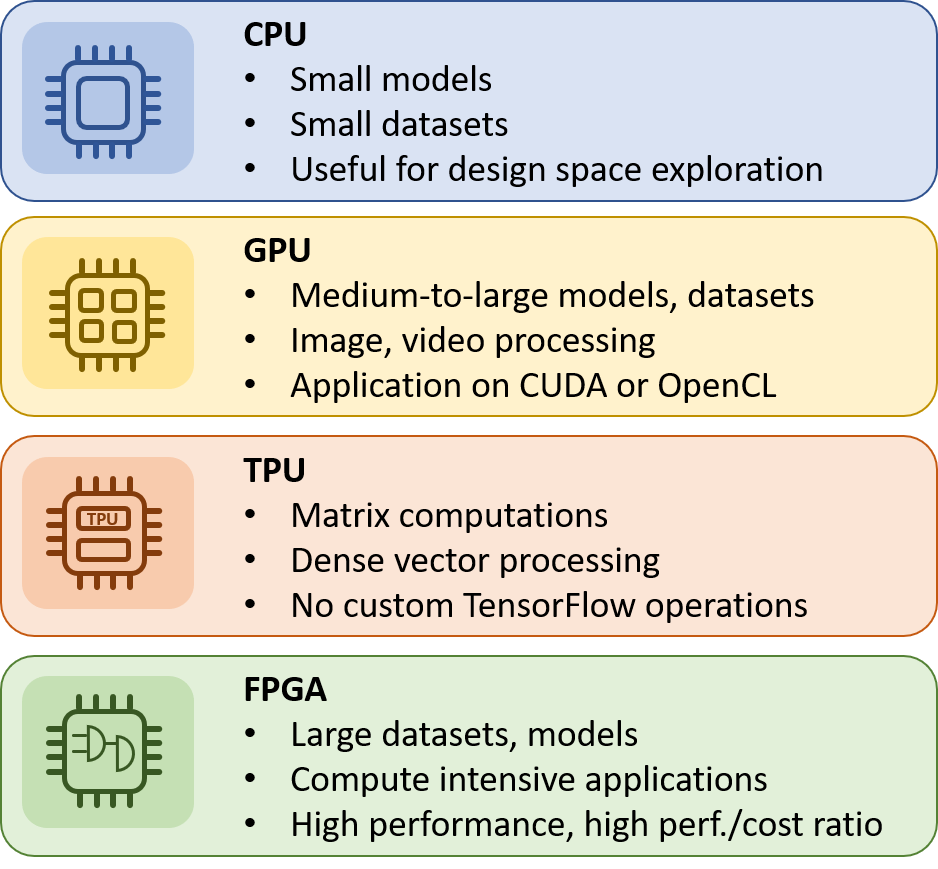

FPGA vs CPU vs GPU vs Microcontroller: How Do They Fit into the Processing Jigsaw Puzzle? | Arrow.com

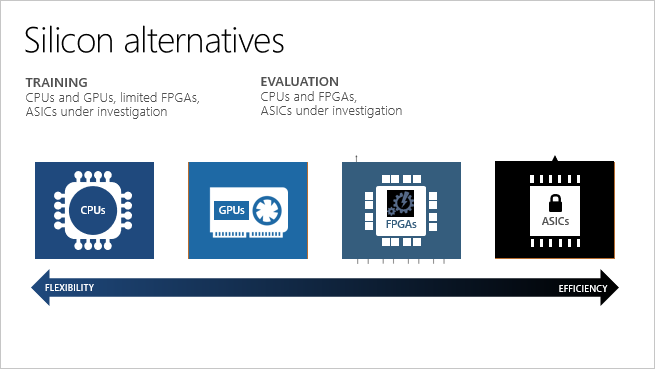

AI Accelerators and Machine Learning Algorithms: Co-Design and Evolution | by Shashank Prasanna | Aug, 2022 | Towards Data Science

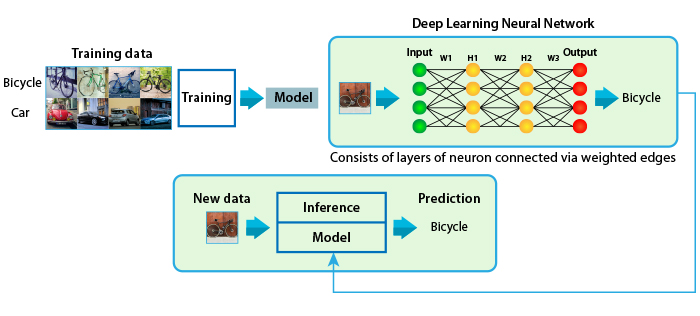

CPU, GPU or FPGA: Performance evaluation of cloud computing platforms for Machine Learning training – InAccel

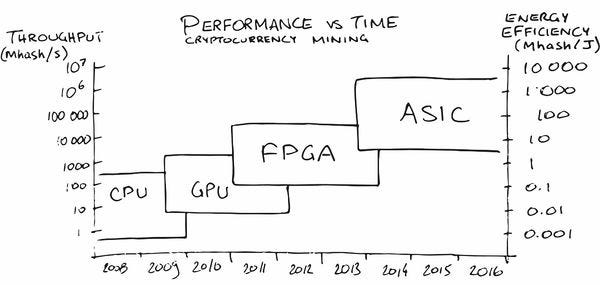

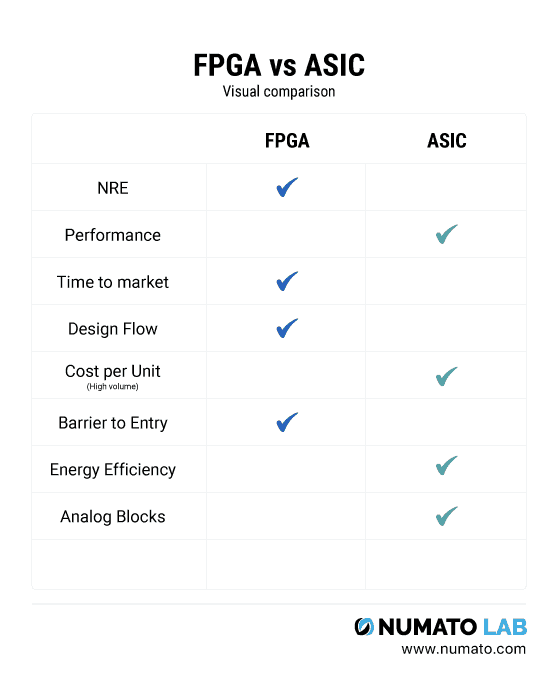

Cryptocurrency Mining: Why Use FPGA for Mining? FPGA vs GPU vs ASIC Explained | by FPGA Guide | FPGA Mining | Medium

![AI Computing Chip Analysis for Software-Defined Vehicles [BLOG] | News | ECOTRON](https://ecotron.ai/wp-content/uploads/2022/02/img1.jpg)